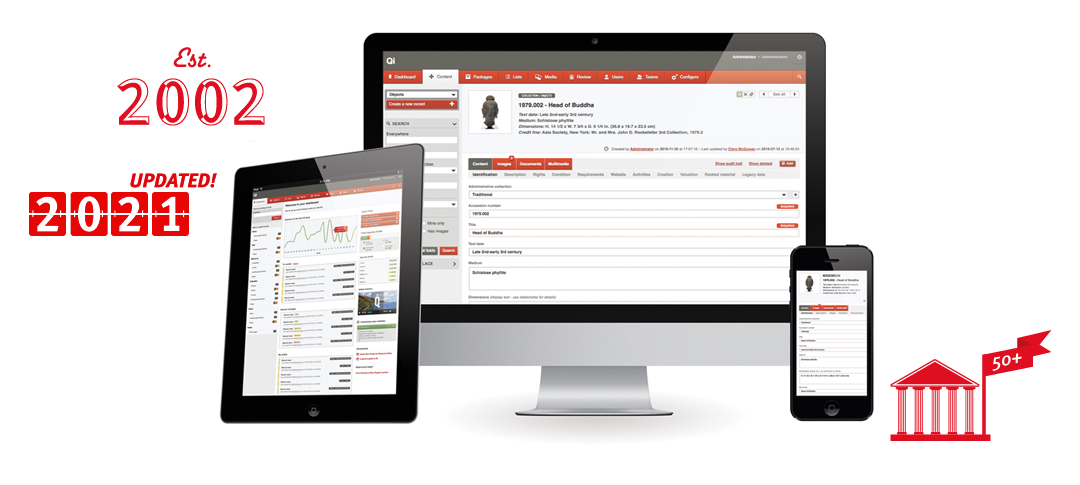

Latest projects

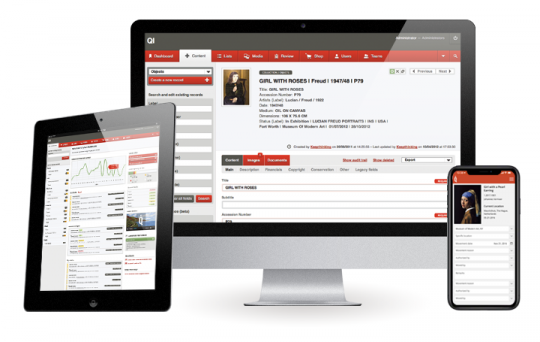

Keepthinking uniquely combines the work of a digital design agency with software development for museums, galleries, archives, libraries, performing arts venues and other cultural organisations. We offer standards-compliant content and collection management software, as well as bespoke design, consultancy and development for any web or mobile application.